In 1971, on my first day of architecture school, a professor said, "Architecture is frozen music." I immediately imagined liquid architecture and wondered how it might be realized. I had studied the violin, photography, film, and video, and then began working with multi-media installations as a way of freeing space and moving it in time. I wanted to perform space and images the way a musician performs on a musical instrument. I had played the violin and moved from classical music to Latin jazz, partly because of the music itself, but also because of the social aspect of the oral traditions informing it. I wanted to improvise moving images and spaces along with the music. I imagined that all physical surfaces could be 3-dimensional screens: semi-permeable membranes passing messages between the interior spaces of the mind and the exterior spaces of the physical world, and between the people communicating in it.

In 1972, I traveled to Morocco from Denmark along with some friends of mine who were expatriate American jazz musicians and poets. I was born in Denmark but spent 13 years in the United States, and as a result, I felt truly at home in neither the United States nor Denmark. I felt as though I, too, was an expatriate, only from 2 cultures. We had all moved a great deal in our lives, and we felt especially alienated by the Vietnam War, which had become increasingly brutal. It was a time of re-evaluating our values, and we rejected those that would cause innocent people to suffer. We were misfits and, as a result, nomads: people with no fixed homes. We felt connected to the nomads of all cultures, including those in Morocco, and considered the earth our real home and all people our family.

I wanted to experience the architecture of the world and the living cultures that shaped it, with their rich histories and traditions. I wanted to explore what I did not know, challenge stereotypes, and form a healthier relationship to the world.

In Morocco, I had many wonderful experiences, but I want to tell you about one in particular today. One of my fellow nomads was the poet and jazz musician Ted Joans. Sometimes he would be inspired by something "in the air," or by something said or seen, and he would take out his trumpet and spontaneously begin playing, right in the middle of an old town, or medina.

Within what seemed like seconds, many people of all ages would appear from doorways and around corners with small clay drums, and they would all play together, filling the air with joy and energy. It filled our souls with the beauty of the human spirit transformed into sound. Even though we came from different cultures, we could transcend our differences when we played music together.

I thought to myself: if only we could do this with images and spaces, too, responding to our physical and emotional gestures. If only we could use telecommunications technology to bring together people from all over the world, along with their rich cultural traditions, both visual and musical, and bring into being a global visual music jam session.

With electronic cameras and imaging devices, microphones, and electronic musical instruments, it had become possible for the first time in history to perform moving images as well as music in real time. With digital technology, it would also be possible to create and transform 3-D spaces in real time, and to share the memory and intelligence of the organized information they contain. With telecommunications technology, it was possible to connect people all over the world with each other, and through these images, spaces, and sounds, they would be able to play together.

This technology would be an instrument, not just a tool, affirming life rather than destroying it. All of this was possible, but it would take many years to realize, especially considering the cost of the technology and the poverty of so many people around the world. But even in Morocco, in many poor neighborhoods, I saw a great many television antennas on top of houses. I felt it was imperative to democratize new technology even further, and to make it more inclusive of many cultures and traditions.

Music and the visual arts and crafts were already close to the people, so I felt that the best way to create a dynamic, inclusive link between people and technology was to work with those art forms in the context of new technology. I began working toward this goal, step by step, in 1972.

I began by exploring my own cultures and subjective reality. If the work was to be truly inclusive of everyone's culture and imagination, it also had to be inclusive of my own. I needed to find out who I was, as a human being and as a woman, first.

For the next 10 years, I worked in film and video, especially with real-time video synthesizers. By combining objective, photographic images with subjective, synthetic images, I could begin to express emotional and intellectual states informed by dream and memory and inspired by music.

I wanted to create a fluid continuum between interior and exterior, and between sound and image. I worked with electronic music synthesizers and sensing and recording devices, and linked them together using the patch-programmable paradigm that evolved from the early audio synthesizers designed by Robert Moog and Donald Buchla. In those days we used oscilloscopes to produce raw, dynamic visual images from sounds. We also experimented with using frequency-shifted electronic signals from video as an audio source. But interesting sounds rarely produced interesting images, and vice versa.

I came to use separately composed control signals to shape the generation of both images and sounds. They could each be carefully considered and tuned independently, while still sharing a fundamental, gestural link.
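As a rough modern illustration of this idea (plain Python, not the original analog patch and not Pd code), one composed control signal can drive both a pitch and an image brightness through two mappings that are tuned independently. The names `control_signal`, `to_pitch_hz`, and `to_brightness`, the 4-second cycle, and all numeric ranges are hypothetical choices made for this sketch.

```python
import math

def control_signal(t: float) -> float:
    """Hypothetical composed gesture: a slow swell in [0, 1] with a 4-second cycle."""
    return 0.5 * (1.0 - math.cos(2.0 * math.pi * t / 4.0))

def to_pitch_hz(c: float, low: float = 110.0, octaves: float = 3.0) -> float:
    """Sound mapping: exponential, so equal control steps sound like equal pitch steps."""
    return low * 2.0 ** (octaves * c)

def to_brightness(c: float, gamma: float = 2.2) -> float:
    """Image mapping: gamma-shaped, tuned separately from the pitch mapping."""
    return c ** (1.0 / gamma)

# The same control value feeds both modalities; only the mappings differ.
for t in (0.0, 1.0, 2.0):
    c = control_signal(t)
    print(f"t={t:.1f}s control={c:.2f} "
          f"pitch={to_pitch_hz(c):.1f} Hz brightness={to_brightness(c):.2f}")
```

Because the sound and image each get their own mapping function, either can be retuned without touching the other, while both still follow the same gesture.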

I also worked with early computer graphics systems in the mid-1970s, but their limitations made them too problematic for real-time performance. Nonetheless, I continued to work with digital technology, and by the early 1980s I had begun teaching computer graphics to artists and creating a series of stereoscopic computer-generated images dealing with language, perception, and technology, including this work, "Fish and Chips" (shown here in 2-D).

I continued to create installations and films that explored aspects of visual music (which I consider poetry, because it is associative) as well as my own cultural heritage. I pursued the integration of film, video, and 2-D and 3-D computer graphics. My works during this time included Solstice, a series of multi-media works exploring an ancient pagan summer solstice ritual in contemporary Scandinavia, and Concurrents and NLoops, multi-monitor computer-video installations and performances exploring visual relationships to polyrhythmic musical structures.

Between 1989 and 1993, I collaborated with computer scientist Phil Mercurio at the San Diego Supercomputer Center to create a real-time stereoscopic animation system. My stereoscopic work "Maya," also structured musically, is inspired by the Hindu term for the conflict between illusion and reality. In both NLoops and Maya, I collaborated with composer Rand Steiger. (The 2-D stills below are from the stereoscopic animation, which is 7 1/2 minutes long.)

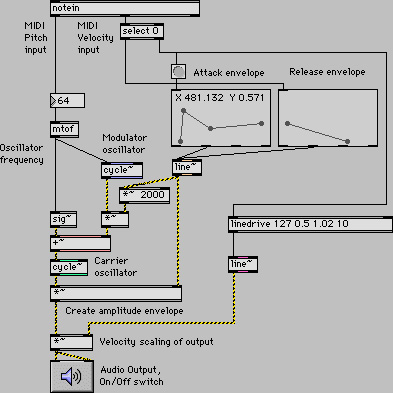

Ten years ago, I heard about software called "Max," part programming language and part application, which was starting to be widely used in the computer music community. Max transferred the patch-programmable paradigm of electronic musical devices to the computer for the generation of real-time music. It allowed musicians to create their own applications quickly and intuitively, without the limitations of fixed software applications or the time-consuming complications of programming languages.

I thought to myself: if only this could be extended to images and collaborative spaces. A few years later, I met Miller Puckette, the author of Max. I asked him, "Can you do this with images, too?" He said yes; he and others were already working on it. Max had been extended to include some limited graphics capabilities. But more exciting than this, he was embarking on the creation of a new package that would build on the strengths of Max but go further. "Pd," or "Pure Data," would treat all data the same, regardless of origin. Once transferred into the computer, data from any modality would be available for interaction and even for transformation into any other.

Soon afterwards, Mark Danks, a student from Princeton, came to study with Miller and Rand, who were by then teaching at UC San Diego, and he began making GEM, the Graphics Environment for Multimedia, which would bring the full capabilities of OpenGL graphics into the Pd environment (example below). Shortly thereafter, Dana Plautz visited me at USC and invited me to apply to the Intel Research Council.

I thought about the approach I had developed in my old synthesizer days, when I used common control voltages to link video images and electronic sounds. I thought that the same approach could be used to link moving images and sounds separated by great distances. One could send movement and gesture information between 2 (or more) computer systems, which would then use these signals to animate locally stored images and sounds. This would eliminate the need to send entire animation and sound databases in real time, which is a very slow process on most currently available networks. In fact, control voltages linking separate audio and video systems are a good metaphor for what can be sent over a network to link computers at separate sites.
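A minimal sketch of the idea, assuming each site already holds its own copy of the media: only a short gesture message crosses the network, and the receiving site uses it to drive a locally stored animation. The text format is loosely modeled on the semicolon-terminated, space-separated messages that Pd's netsend/netreceive objects exchange, but the exact format, the `LOCAL_KEYFRAMES` data, and the functions `encode_gesture`, `decode_gesture`, and `animate_locally` are all hypothetical.

```python
# Hypothetical wire format, loosely modeled on Pd's netsend/netreceive text
# messages: space-separated atoms, terminated by ';' and a newline.
def encode_gesture(name: str, values: list[float]) -> bytes:
    """Pack one gesture sample as 'name v1 v2 ...;'."""
    atoms = " ".join(f"{v:g}" for v in values)
    return f"{name} {atoms};\n".encode("ascii")

def decode_gesture(msg: bytes) -> tuple[str, list[float]]:
    """Inverse of encode_gesture: split on whitespace after dropping the ';'."""
    text = msg.decode("ascii").strip().rstrip(";")
    name, *atoms = text.split()
    return name, [float(a) for a in atoms]

# Stored at every site; never sent over the network.
# Hypothetical (control value, rotation angle in degrees) keyframe pair.
LOCAL_KEYFRAMES = [(0.0, 0.0), (1.0, 10.0)]

def animate_locally(control: float) -> float:
    """Linearly interpolate the local keyframes using the received control value."""
    (c0, a0), (c1, a1) = LOCAL_KEYFRAMES
    u = (control - c0) / (c1 - c0)
    return a0 + u * (a1 - a0)

# For comparison: one uncompressed 320x240 RGB video frame.
FRAME_BYTES = 320 * 240 * 3

msg = encode_gesture("hand", [0.25, 0.8])
name, values = decode_gesture(msg)
print(len(msg), "bytes sent instead of", FRAME_BYTES, "for a single raw frame")
print(name, "angle:", animate_locally(values[0]))
```

The design choice mirrors the control-voltage metaphor: the message carries a gesture, not a picture, so its size stays tiny no matter how rich the locally stored images and sounds are.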

In addition, this approach would allow each site to add to or modify the animation and music by mixing in site-specific data, with the potential to customize it in real time with input from local performers. The multiple sites could interact with each other, but each would also be free to create its own local performance by mixing in any data in whatever way it wished.
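One simple way a site might mix in local data, sketched here under the assumption that control values arrive as named numbers, is a linear crossfade between the remote values and the local performer's values. The function `mix_site` and its `weight` parameter are hypothetical; a crossfade is only one of many possible mixing rules, chosen here for clarity.

```python
def mix_site(remote: dict[str, float], local: dict[str, float],
             weight: float) -> dict[str, float]:
    """Blend remote control values with local performer input.

    weight = 0.0 follows the remote sites exactly; weight = 1.0 follows only
    the local performer. Parameters present on only one side pass through.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be in [0, 1]")
    mixed = dict(remote)
    for key, value in local.items():
        if key in remote:
            mixed[key] = (1.0 - weight) * remote[key] + weight * value
        else:
            mixed[key] = value  # a purely local parameter
    return mixed

remote = {"hand": 0.2, "tempo": 0.5}  # hypothetical values from another site
local = {"hand": 0.6, "color": 0.9}   # hypothetical local performer input
print(mix_site(remote, local, 0.5))
```

Each site can choose its own `weight`, so the same incoming gestures can yield a different local performance at every location.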

Miller, Rand, and I then had detailed discussions about these and related issues, and prepared a full proposal. We are very grateful that Intel chose to support our project.

In our work, we would have to address several big issues. The first was to describe what a flexible, real-time, digital visual-musical or poetic multi-modal system would do. We would then have to further develop the software that Miller and Mark had begun, and set up hardware systems to fully realize its potential. Next, we would have to consider questions of networking and develop a strategy to cope with network latency. Finally, we would have to test our system in a real-time setting with live musicians and artists, and eventually stage simultaneous, linked performances, first at 2 and then at an increasing number of separate sites.

We are, of course, aware of the many experiments by others in the area of performance and telecommunications. The technical paradigm we have adopted reaches for a higher level of abstraction in the communications protocol, and a greater degree of fluidity between modalities. But we share with many artists the desire to enrich present and future telecommunications systems with the tools necessary to make long-distance artistic collaboration possible.

Pd and GEM allow us to integrate 2-D and 3-D computer graphics and animation, video, video synthesis and image processing, audio synthesis and signal processing, and networking, thereby bringing together the electronic technologies of preceding decades. We are working out how to integrate the elements and parameters of these previously disparate media, one step at a time. We are also working to integrate tools that, for example, sense physical and musical gestures or analyze sounds and moving images.

While we have many tools in GEM for synthesizing multiple 2-D and 3-D images and objects, including colorizing, texture mapping and texture animation, hierarchical object animation and transformation, particle systems, lighting, keying, and layering, image analysis is still in its early stages. So far we have focused primarily on music and sound analysis, and on integrating live video and animated textures from multiple sources. We are also developing a neural net application as an additional way of making associations between data.
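As one hedged illustration of sound analysis feeding graphics, the sketch below measures the root-mean-square (RMS) amplitude of one 64-sample audio block (the size of Pd's default DSP block) and maps it to the scale of a graphics object. This is plain Python rather than a Pd/GEM patch; RMS is a standard amplitude measure, not necessarily the analysis used in the project, and `rms` and `scale_from_amplitude` are hypothetical names.

```python
import math

def rms(block: list[float]) -> float:
    """Root-mean-square amplitude of one block of audio samples in [-1, 1]."""
    if not block:
        return 0.0
    return math.sqrt(sum(s * s for s in block) / len(block))

def scale_from_amplitude(level: float, min_scale: float = 0.5,
                         max_scale: float = 2.0) -> float:
    """Hypothetical mapping: louder sound -> larger object, clamped to a range."""
    level = max(0.0, min(1.0, level))
    return min_scale + level * (max_scale - min_scale)

# A 64-sample block of a full-scale sine wave, 4 complete cycles per block.
block = [math.sin(2 * math.pi * n / 16) for n in range(64)]
level = rms(block)
print(f"rms={level:.3f} scale={scale_from_amplitude(level):.3f}")
```

For a full-scale sine over whole cycles the RMS is about 0.707, so the object is drawn somewhat larger than its middle size; the range and the linear mapping are arbitrary and would be tuned by the artist.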

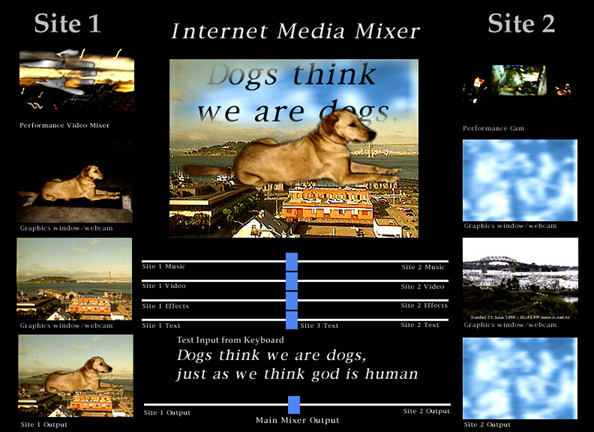

Miller has already created objects for making network connections between computers running Pd, and we are working on an object that will grab images from live webcams for real-time processing and display within GEM. The next stage in web and networking tools includes setting up Pd as a browser plug-in, with a specific application that will function as a multi-media mixer, allowing users to mix and match streaming audio and video from multiple sources on the internet. This way, anyone with a net connection can participate in our multiple-site performances by making their own mix of the multi-media data coming in from the various sites involved.
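The core of such a mixer can be sketched as a weighted blend of frames arriving from several sources, with each viewer choosing the weights. The sketch below assumes tiny grayscale frames flattened into lists of intensities in [0, 1]; the function `mix_frames` and the sample frames are hypothetical, and a real mixer would also handle timing, decoding, and audio.

```python
def mix_frames(frames: list[list[float]], weights: list[float]) -> list[float]:
    """Weighted average of equally sized frames (flattened intensities in [0, 1])."""
    if not frames or len(frames) != len(weights):
        raise ValueError("need one weight per frame and at least one frame")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    size = len(frames[0])
    if any(len(f) != size for f in frames):
        raise ValueError("all frames must have the same size")
    norm = [w / total for w in weights]  # normalize so the mix stays in [0, 1]
    return [sum(n * f[i] for n, f in zip(norm, frames)) for i in range(size)]

# Tiny 2x2 grayscale frames from three hypothetical sites.
site_a = [0.0, 0.5, 1.0, 1.0]
site_b = [1.0, 0.5, 0.0, 0.0]
site_c = [0.2, 0.2, 0.2, 0.2]
print(mix_frames([site_a, site_b, site_c], [2.0, 1.0, 1.0]))
```

Normalizing the weights means each viewer only has to express relative preferences between sources; the mixed image never exceeds the valid intensity range.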

Gradually, we hope to break down the distinction between broadcaster and receiver, performer and audience, by having the full set of multi-media tools available to all participants to both receive and send data, so that we can truly bring about a global visual music jam session.

The bottom line is that the system must be open and flexible, work in real time, and be freely and widely available. The reason is that the visual-musical or other associations possible between modalities are highly subjective and vary widely. Rather than providing a fixed set of tools that assume universal associative principles, we wish to provide the greatest possible flexibility, so that a wide variety of unique individual and cultural identities can be shared in a global context.

We want to explore in greater depth the possibilities for creating subjective relationships between modalities. We are interested in relationships such as those that form the basis of "poetic," or associative, thinking. Poetry is visual music, and visual music is poetry, because both are associative. As the late philosopher Alan Watts stated, "The power of poetry comes from its associative rather than logical qualities."

It is the richness of the associations, and the fluid possibilities for further interaction and association among multiple participants, that fascinate us. This kind of poetic thinking is already proliferating as people engage in it daily through linked media on the World Wide Web. Because so many of our sense perceptions, ideas, and memories can flow fluidly across the digital continuum and continually transform themselves non-linearly in real time, what we are experiencing is a kind of poetic collective consciousness, a kind of shared lucid dream.

Our vision of networked, improvised, multi-modal visual music, or liquid architecture, or dynamic poetry, is just one way of talking about a globally shared lucid dream. Above all, it is this dream of dreaming in the spaces that we share that drives and informs our research. While we are not yet there, we can see it clearly on the horizon.

Text and images (c) 1998 Vibeke Sorensen